Artificial Intelligence: Challenging the Status Quo of Jurisprudence

Covered by LiveLaw: https://www.livelaw.in/columns/artificial-intelligence-jurisprudence-175193

Jurisprudence has always been challenged by innovations, socio-economic developments, and changes in the political landscape. However, as artificial intelligence becomes involved in more and more aspects of life, jurisprudence now faces some of its most complex challenges. The legal fraternity needs a much better acquaintance with the technical space, as the policies it drafts will directly influence the products that engineers develop. To understand the parallels one can draw between Artificial Intelligence and Law, let’s walk through a few autonomous systems where AI is already confronting the legal field.

Who will be liable if a Tesla hits someone?

Steady advances across a spectrum of technologies have brought autonomous cars out of sci-fi movies and into reality, and with these innovations came challenges for lawmakers. A simple question such as “Who is liable if a vehicle causes the death of a pedestrian?” is easy to answer for an ordinary human-driven car. But what about a car that has no driver? Civil liability may still be relatively easy to determine by embracing the doctrine of vicarious liability, but since criminal law has no parallel to this doctrine, criminal liability is harder to adjudicate. In usual circumstances a driver can be charged with homicide, but when a car is driving itself, who is liable: the car owner or the manufacturer, the software or the hardware vendor, or perhaps all the passengers collectively? These and similar questions have baffled lawmakers and researchers across the world, and until they are resolved, it will remain difficult to use these innovations to their full potential. For instance, however well an autonomous car performs, presently no country allows it to run on the roads entirely by itself. In the United States, where most autonomous car trials have taken place, laws mandate the presence of a human who can take control of the wheel of a moving vehicle, which puts all the liability on this operator in case of an accident. This absence of proper legislation is why the phrase “autonomous feature requires active driver supervision” appears in the Terms and Conditions for the autonomous operation of such cars. On one end, Artificial Intelligence is enabling rapid innovations that challenge the laws and, on the other end, the absence of appropriate laws is restricting how these innovations can be used.

Judiciary using AI: What can go wrong?

The legal fraternity has started recognising the potential of AI in legal research, but it also acknowledges the risks of integrating these systems into decision-making. The phrase “garbage in, garbage out” applies aptly to artificial intelligence: a bias in the data set used to train a model will result in a bias in its decisions. The judiciary is a safeguard of citizens’ rights, especially when the executive shows a disregard towards them, and hence the repercussions of a bias in decision-making are catastrophic. There is also the potential for intentional bias, since many influential people have a lot to lose and a lot to gain by swaying legal proceedings in their favour. An interesting point to note is the principal difference between the bias created by these AI systems and the natural bias that arises from human behaviour. The bias in AI systems can be an “organised” bias that infiltrates the judiciary at every level and silently influences it. Besides, there is an additional threat to judicial independence because of the involvement of the tech giants who will be developing these AI-powered systems. These potential threats are why most legal experts oppose involving AI in decision-making and suggest limiting its use to legal research. But who’s to say bias cannot creep in there? Just as social media can influence your opinion by showing biased content, so can AI-driven legal research tools. Judges too read white papers, judgements and precedents while adjudicating, and these research tools can sway their opinion, for instance by showing only those research items which favour one side. Additionally, if these tools become a single point of failure, even a small bias can create an avalanche of issues.

Criminalising Algorithms

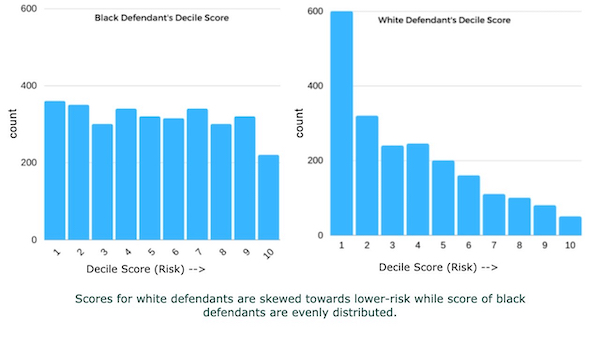

A principal example of AI in adjudication is the set of criminalising algorithms, especially COMPAS, which is actively used in Florida to predict the risk that a convict will commit a crime again and which thereby affects the offender’s sentence. Researchers have found that these algorithms are almost twice as likely to wrongly flag black defendants as future criminals [Read Further]. A common contention among legal experts is also that, since undertrials cannot determine the reasoning of these algorithms, the system denies them due process. We therefore need a sufficiently transparent system that provides comprehensive visibility into all its aspects, such as the algorithms powering the system, the data used to train these algorithms, and the shortcomings of the algorithms. One mechanism to bring transparency to this upcoming ecosystem is to open-source all the technologies and data models that power these systems and to accept scrutiny from the public. Open-sourcing these technologies would help ensure that no single player can influence the apparatus, as any judge would be able to employ the software directly from the open-sourced code, trained on an equally important open-sourced data set.
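
One way to make a disparity like the one reported above concrete is to compare, group by group, how often people who did not go on to reoffend were nevertheless flagged as high risk. The following is only a minimal sketch of that check. The records, the group labels `A` and `B`, and the function name `false_positive_rates` are invented for illustration; they are not COMPAS data and say nothing about how COMPAS works internally.

```python
from collections import defaultdict

# Toy records, invented purely for illustration (NOT real COMPAS data).
# Each record: (group, flagged_high_risk, reoffended)
records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", False, False), ("A", True, True), ("A", False, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", True, True), ("B", False, True),
]


def false_positive_rates(rows):
    """Per group: share of people who did not reoffend but were flagged anyway."""
    flagged = defaultdict(int)
    did_not_reoffend = defaultdict(int)
    for group, flagged_high_risk, reoffended in rows:
        if not reoffended:
            did_not_reoffend[group] += 1
            if flagged_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / did_not_reoffend[g] for g in did_not_reoffend}


rates = false_positive_rates(records)
print(rates)                    # {'A': 0.5, 'B': 0.25}
print(rates["A"] / rates["B"])  # 2.0
```

In this toy data, group A is wrongly flagged at twice the rate of group B even though both groups reoffend equally often. A check of this kind is only possible when the predictions and outcomes are open to scrutiny, which is the point of the transparency argument.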

The Vague Judicial Boundaries

Another important factor to consider is that judicial boundaries are hazy. There have been numerous instances where constitutional courts have set precedents in the context of an evolving society and a moral sense of what is correct. A prime example is India’s Three Judges Cases, in which the judiciary derived the collegium (voting) system for the selection of judges with the vision of ensuring judicial independence, even though the constitution has no provision for it. Or consider the Puttaswamy judgement, which declared the Right to Privacy a fundamental right despite no mention of such a right in the Indian constitution. Because of these subjective boundaries, some have called such judgements judicial overreach. To say that it is difficult to train machines to understand these societal aspects and to have a vision for an evolving society would be an understatement.

Having said the above, among all the areas that artificial intelligence can revolutionize, judicial systems would be one of the most critical and would require the highest degree of scrutiny, caution, perfection and precision.

Will liability principles restrict the extent of AI in healthcare?

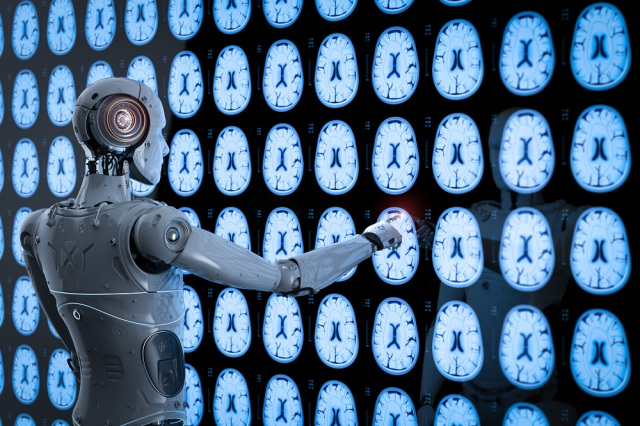

A critical space where AI has the potential to affect millions of lives is clinical diagnosis, along with the systems that analyse these diagnoses to devise treatment plans. These systems raise a vital question of informed consent. Would the physician be legally required to inform the patient about the use of AI in their treatment? Additionally, since no system is infallible, what if AI-powered software provides an incorrect treatment recommendation? Or what if a tool (such as the Corti software) incorrectly decides that a patient is not having a cardiac arrest, thereby delaying the treatment? These scenarios raise significant concerns about the current liability regime, such as: should the hospital be vicariously liable for a mistake by an AI-powered tool, just as it would have been had a human employee made the error?

These concerns demand answers from the legislators. Therefore, lobbies rooting for the techno-medical space, especially in the European Union and the United States, are demanding policies to govern these eventualities. To resolve these baffling issues, the legislators need to balance innovation with reasonability and are required to come up with an optimal liability principle.

Recent Developments

Since this is a budding domain, the liability principles pertaining to the techno-medical space are only rudimentarily understood, and the few pieces of legislation we have are nascent. For example, a report published by the European Commission in 2020 [Ref] shows that Europe is not yet ready for the new liability challenges that AI-backed medical systems will bring with them.

To understand the status of US policies, consider the above example, where an autonomous system comes up with an incorrect treatment plan (one that a practitioner working without AI would not have arrived at) which the clinician adopts, eventually harming the patient. In such a scenario, the FDA’s rules entail that even though the practitioner relied on FDA-authorized software, the practitioner is most likely to be held liable for medical malpractice, since the FDA has termed such software CDS, i.e. Clinical Decision Support software. This classification places “support” software under the wider term of “tools”. What this means is that these “tools” are merely enablers: an informed final call needs to be taken by the practitioner, who is hence ultimately liable for the outcome.

Challenge With Black-Boxing of Algorithms

However, practitioners face a challenge if they must take the final call: what if engineers “black-box” these tools, preventing practitioners from understanding the rationale behind a decision? Hence there is a need to ensure that health care professionals can independently review the basis for the recommendations that such software presents. This would allow practitioners to use CDS software only as a confirmatory tool that assists the existing decision-making process. For this very reason, the FDA requires that

the software developer should describe the underlying data used to develop the algorithm and should include plain language descriptions of the logic or rationale used by an algorithm to render a recommendation.

A critical implication of this principle is that, since medical practitioners remain on the hook for the resulting liability, they would be discouraged from relying on CDS software. But what if we learn that, had the practitioners relied on these CDS tools in all cases, more lives would have been saved? Then we would need to revisit the above liability principle.

Despite the risks & the liability challenges, can we imagine a future where the use of AI-based technology becomes the standard of care, and thus the choice not to use such technology would subject the clinician to liability? This space requires legislators, technologists, legal experts & medical experts among many others to work together and come up with an optimal liability principle adhering to the doctrine of utilitarianism.

The Dilemma of Objectifying Life’s Value

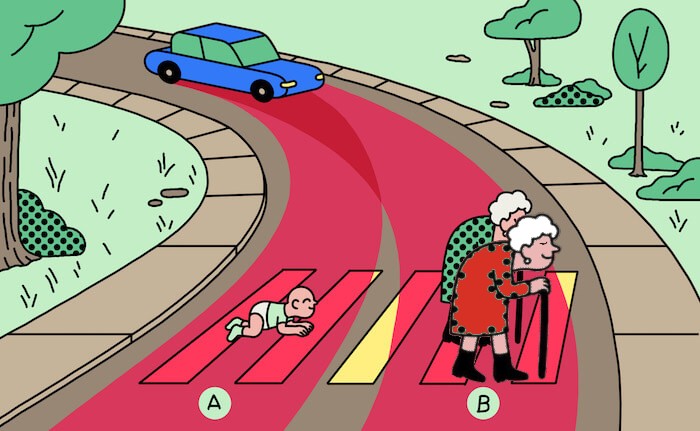

Consider a situation where an autonomous vehicle faces the conundrum of either continuing in its lane B (option 1), endangering the lives of an old couple, or switching to lane A (option 2), endangering an adolescent. “If” all lives are considered equally important, then the system should switch to lane A, endangering one life instead of two. But is the count of lives the only important factor here? For instance, could we say that since the total life years at stake in option 1 are probably fewer than in option 2, option 1 might be the better choice? What about situations where everything else remains the same, and the dilemma is to choose between a rich man & a poor man, or a male & a female, or other such enigmas? It is tough to derive an objective solution in such scenarios, but the autonomous system will need to take a binary call. One path is to adopt the idea of utilitarianism, but such a model has the potential to punish a poor labourer walking on the footpath in order to spare an influential person who is jaywalking.
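
The tension between the two criteria above can be shown in a few lines. This is a hedged sketch, not how any real vehicle decides: the ages, the `ASSUMED_LIFESPAN` of 80 years, and all names (`Option`, `lives_at_risk`, `life_years_at_risk`, `choose`) are assumptions made up for illustration.

```python
from dataclasses import dataclass

ASSUMED_LIFESPAN = 80  # hypothetical figure, chosen only for this illustration


@dataclass
class Option:
    name: str
    ages: list[int]  # assumed ages of the people this option endangers


def lives_at_risk(option: Option) -> int:
    """Criterion 1: every life counts equally."""
    return len(option.ages)


def life_years_at_risk(option: Option) -> int:
    """Criterion 2: count the remaining life years that would be endangered."""
    return sum(max(ASSUMED_LIFESPAN - age, 0) for age in option.ages)


def choose(options, cost):
    """Pick the option that minimises the given cost criterion."""
    return min(options, key=cost)


stay = Option("option 1: stay in lane B (old couple)", [75, 78])
switch = Option("option 2: switch to lane A (adolescent)", [15])

print(choose([stay, switch], lives_at_risk).name)       # option 2: switch to lane A (adolescent)
print(choose([stay, switch], life_years_at_risk).name)  # option 1: stay in lane B (old couple)
```

The same situation yields opposite decisions depending on which cost function is plugged in, so whoever selects the criterion is effectively making the moral choice.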

Should Engineers Decide Who Would Live?

Presently, private companies make these calls at their own discretion. For instance, Mercedes-Benz revealed that its autonomous vehicles would always put the driver first [Ref]. So if the system has to choose between saving a pedestrian and ramming into a tree, thereby endangering the driver, it will choose to protect the driver. From the product perspective this is a good rationale, but since these decisions affect society at large, private companies cannot be left alone to take such calls. These dilemmas call for legislators to step in and formulate a balanced model to govern these complex issues. It might even turn out that, to resolve these perplexities, legislators devise a model that puts an objective value on an individual’s life.

Autonomous War Machines

The challenge with autonomous vehicles was to decide whom to save. With an AI-powered war machine, the challenge is to figure out “whom to kill”. The space of autonomous war machines is rapidly evolving. For instance, we have the Pegasus Jet, which is fully capable of identifying threats and taking them down, and Talon, which is an automated cannon. These machines too will challenge the laws, policies and precedents settled across centuries of jurisprudence. Only limited legislation exists to govern these aspects, and most of it reflects the hesitancy of legislators to rely on these machines. For example, US laws ensure that a kill decision always involves human approval. Apart from the legislative hesitancy, there is little awareness of such matters within the legal fraternity. Hence human intervention in these machines is here to stay until we have data to convince us otherwise and comprehensive legal provisions to govern this domain.

So, what’s next?

We have seen how the rapid evolution of artificial intelligence tests the very fabric of jurisprudence. The superior level of autonomy in these systems demands discussion of the moral hazards & the benefits of establishing an “autonomous system” as a “legal personality”. It also requires us to revisit the subject of strict liability, or even the possibility of shifting the focus to compensation without incarceration.

Further, the lack of transparency in the algorithms running these autonomous systems increases the risk & hence gives way to scepticism. The world has already seen many examples where people were refused loans, denied jobs, or put on no-fly lists without an explanation because an AI took the decision. We have also seen evidence of data bias, such as Google’s fiasco of labelling black people as gorillas [Ref] or the racial prejudice in Twitter’s image-cropping functionality [Ref].

The Changing Focus

Such incidents are a failure not just of the technology field but also of the legislative bodies, which failed to prevent them because of the absence of any oversight. These events bring focus to “technology law”. And when it comes to “technology law”, the EU, the US, the UK and a few others model policies that influence the entire globe. We have evidence of a shift in their focus towards better oversight of this techno-legal space, such as the White House’s draft guidance listing principles for developing AI applications [Ref], an algorithmic transparency standard by IEEE (IEEE P7001) [Ref], and the European Commission’s ethics guidelines for trustworthy AI.

This shift in focus is very recent & hence there is a lot of ground to cover before we can claim that the field is well legislated. Besides, it takes decades for laws to mature & hence there is an urgent need to increase this focus. Moreover, because of the exponential advancements in artificial intelligence, both the quantity and the complexity of the challenges for jurisprudence will rapidly increase. Legislators will need to constantly lift the veil of ambiguity and bring in thoughtful policies which balance innovation, rationale and, most importantly, human rights.